In our “What is on-page SEO” blog post, we explored the ways you can optimize your pages for search engines. In this post, we’ll explore technical search engine optimization (SEO) techniques you can use to improve your ranking.

Key Points:

Technical SEO includes robots.txt, canonicalization, sitemap.xml, and site speed.

Why technical SEO is important

Technical SEO is important because issues like duplicate content without canonical links or slow site speed can undermine your marketing and on-page SEO efforts. Improving your site speed, for example, can help boost your ranking across your entire website.

With that in mind, let’s dive into the technical SEO practices you can implement on your website.

Robots.txt and technical SEO

A robots.txt file tells search engine crawlers, like Googlebot, which URLs they can and can’t access on your site. You’ll want to customize your robots.txt file to fit your website’s needs, but if you’re using a CMS like Silverstripe or WordPress, there are some general guidelines you can follow.

Silverstripe robots.txt recommendations:

For Silverstripe, we recommend the following in your robots.txt file:

```text
User-agent: *
Disallow: /admin
Disallow: /Security
Disallow: /dev

Sitemap: https://yourdomain.com/sitemap.xml
```

This tells all crawlers to stay out of everything under /admin, /Security, and /dev. These URLs don’t need to be crawled or indexed by Google, as they are intended only for CMS administrators or Silverstripe developers. For the Sitemap line, make sure the URL matches the location of your website’s sitemap.xml. We’ll talk more about sitemap.xml below.
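If you want to sanity-check these rules before deploying them, Python’s standard-library urllib.robotparser can parse the file and answer “may this crawler fetch this URL?”. The sketch below assumes the Silverstripe rules above with the placeholder domain yourdomain.com; the test paths such as /Security/login and /about-us are hypothetical. This parser applies the first rule that matches rather than Google’s most-specific-rule precedence, which makes no difference here because none of these rules overlap.

```python
from urllib.robotparser import RobotFileParser

# The Silverstripe rules recommended above; yourdomain.com is a placeholder.
SILVERSTRIPE_ROBOTS_TXT = """\
User-agent: *
Disallow: /admin
Disallow: /Security
Disallow: /dev

Sitemap: https://yourdomain.com/sitemap.xml
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text directly instead of fetching it over the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(SILVERSTRIPE_ROBOTS_TXT)

# Hypothetical URLs used only to exercise the rules.
for url in (
    "https://yourdomain.com/about-us",
    "https://yourdomain.com/admin/pages",
    "https://yourdomain.com/Security/login",
    "https://yourdomain.com/dev/build",
):
    print(url, "allowed" if parser.can_fetch("Googlebot", url) else "blocked")

# Sitemap lines are collected separately from the Allow/Disallow rules.
print("Sitemaps:", parser.site_maps())
```

Keep in mind that rules match by prefix, so Disallow: /admin also blocks any page whose path merely begins with those characters, such as a hypothetical /administration-fees page.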

WordPress robots.txt recommendations:

For WordPress, we recommend the following in your robots.txt file:

```text
User-agent: *
Disallow: /wp-admin/
Allow: /wp-admin/admin-ajax.php

Sitemap: https://yourdomain.com/sitemap.xml
```

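This tells crawlers to ignore the /wp-admin/ directory, which is for CMS administrators only. The second rule makes an exception for /wp-admin/admin-ajax.php, which WordPress uses for front-end AJAX requests, so crawlers can still reach it. Google resolves the overlap by following the most specific (longest) matching rule, so the Allow line wins for that file.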
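Python’s built-in robots.txt parser applies the first rule that matches, so it reports admin-ajax.php as blocked under these WordPress rules, while Google allows it. To show where the difference comes from, here is a minimal, hypothetical re-implementation of Google’s documented precedence: among the rules whose path is a prefix of the URL path, the longest one wins, and Allow wins a tie. It is a sketch only; it assumes a single User-agent: * group and ignores the * and $ wildcards.

```python
from urllib.robotparser import RobotFileParser

WORDPRESS_ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Allow: /wp-admin/admin-ajax.php

Sitemap: https://yourdomain.com/sitemap.xml
"""


def parse_rules(robots_txt):
    """Collect (directive, path) pairs from Allow/Disallow lines.

    Assumes one `User-agent: *` group, so user-agent grouping is ignored.
    """
    rules = []
    for raw_line in robots_txt.splitlines():
        line = raw_line.split("#", 1)[0].strip()  # drop comments and whitespace
        if ":" not in line:
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        if field.lower() in ("allow", "disallow"):
            rules.append((field.lower(), value))
    return rules


def is_allowed(rules, path):
    """Longest matching rule wins; Allow wins a tie. Wildcards are not supported."""
    best_length = -1
    allowed = True  # a path that matches no rule may be crawled
    for directive, rule_path in rules:
        # An empty rule path blocks nothing, and non-prefix rules don't apply.
        if not rule_path or not path.startswith(rule_path):
            continue
        is_allow = directive == "allow"
        if len(rule_path) > best_length or (len(rule_path) == best_length and is_allow):
            best_length = len(rule_path)
            allowed = is_allow
    return allowed


rules = parse_rules(WORDPRESS_ROBOTS_TXT)
for path in ("/wp-admin/admin-ajax.php", "/wp-admin/options.php", "/blog/hello-world/"):
    print(path, "allowed" if is_allowed(rules, path) else "blocked")

# For comparison: the standard-library parser stops at the first matching rule.
stdlib_parser = RobotFileParser()
stdlib_parser.parse(WORDPRESS_ROBOTS_TXT.splitlines())
print(
    "stdlib first-match result for admin-ajax.php:",
    stdlib_parser.can_fetch("Googlebot", "https://yourdomain.com/wp-admin/admin-ajax.php"),
)
```

The practical takeaway is that the order of Allow and Disallow lines doesn’t matter to Google, but it can matter to simpler parsers, so test with the crawler you care about.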

If you’re using a CMS other than Silverstripe or WordPress, try searching Google for robots.txt recommendations specific to your CMS.

Canonicalization and technical SEO

Canonicalization involves adding a rel="canonical" link tag to pages containing duplicate content. It tells search engines that another URL is the “original” version of that page and should be prioritized. Here is an example of a canonical tag:

```html
<!-- Place inside the <head> of the duplicate page -->
<link rel="canonical" href="https://yourdomain.com/original-page" />
```

This matters for SEO because it helps the page you want to appear on Google rank better. Instead of leaving Google to index multiple near-identical pages and decide which one to show for a keyword or keyphrase, you can use the canonical tag to tell Google which page to prioritize.
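To confirm which canonical URL a page actually declares (for example, after a template change), you can parse its HTML. The sketch below uses Python’s standard html.parser module; the CanonicalFinder class, the find_canonical helper, and the sample markup are hypothetical, and it assumes the tag is present in the HTML the server sends rather than added later by JavaScript.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Records the href of the first <link rel="canonical"> tag it sees."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        # Also called for self-closing tags such as <link ... />.
        if tag != "link" or self.canonical is not None:
            return
        attributes = dict(attrs)
        # rel can hold several space-separated values, so split it.
        rel_values = (attributes.get("rel") or "").lower().split()
        if "canonical" in rel_values:
            self.canonical = attributes.get("href")


def find_canonical(html):
    """Return the canonical URL declared in `html`, or None if there isn't one."""
    finder = CanonicalFinder()
    finder.feed(html)
    finder.close()
    return finder.canonical


duplicate_page = """
<html>
  <head>
    <link rel="canonical" href="https://yourdomain.com/original-page" />
  </head>
  <body>...</body>
</html>
"""
print(find_canonical(duplicate_page))
```

A page with no canonical tag returns None, which makes it easy to flag duplicates that still need one.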

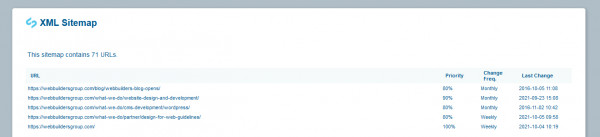

sitemap.xml and technical SEO

A sitemap.xml is a list of your website’s URLs (unless you’ve chosen to exclude them). This is great for crawlers as it helps them find and index pages on your website, especially those that may not have any internal links on your website. Below is a preview of our websites sitemap.xml
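If your platform doesn’t generate a sitemap for you, the format is simple enough to produce yourself. Below is a minimal sketch using Python’s xml.etree.ElementTree that writes the required urlset, url, and loc elements from the sitemaps.org protocol. The build_sitemap name and the page URLs are hypothetical, and optional tags such as lastmod are left out.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(page_urls):
    """Return sitemap.xml content listing each absolute URL in `page_urls`."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for page_url in page_urls:
        url_element = ET.SubElement(urlset, "url")
        # ElementTree escapes characters such as & for us, as the protocol requires.
        ET.SubElement(url_element, "loc").text = page_url
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + ET.tostring(urlset, encoding="unicode")


# Hypothetical pages; list the canonical URL of each page you want indexed.
print(build_sitemap([
    "https://yourdomain.com/",
    "https://yourdomain.com/about-us",
    "https://yourdomain.com/products?category=shoes&page=2",
]))
```

Listing only canonical URLs keeps the sitemap consistent with your canonical tags, so you aren’t asking Google to index duplicates.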

If you’ve just launched a new site, or have never submitted a sitemap for your existing site, you should submit your sitemap.xml to Google Search Console for two reasons:

- It tells Google where to find your sitemap.xml so it can crawl and index your website’s pages.

- It shows you whether Google is having issues processing your sitemap.xml.

Site speed and technical SEO

This is likely the most challenging aspect of technical SEO. If you are a nontechnical website administrator, you probably won’t be able to implement many of the improvements without the help of a web developer. So, what do I mean by site speed? Do I mean how fast your website can finish the 100m sprint? No. Site speed is the time it takes for your website to load in a visitor’s browser, from the initial request (like when someone clicks your result in Google) to the moment the page can be interacted with.

Site speed matters for search because Google uses page speed as one of its ranking signals, so faster pages have an advantage in search results. It’s just as important for visitors, because slow-loading pages frustrate users and can cause them to leave.

Testing your site speed

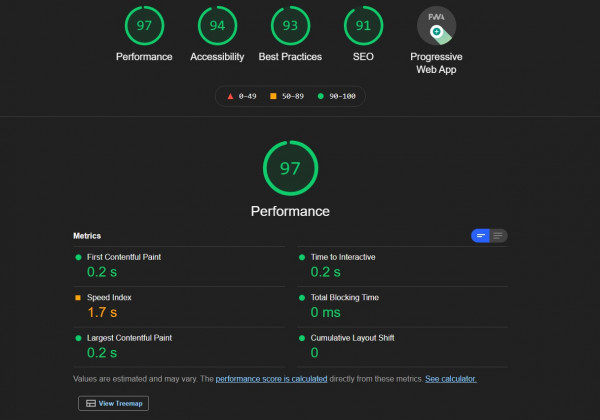

Before you can begin making improvements to your site speed its probably best to understand how your website is performing now while getting some recommendations from an automated tool. You can use the Google page speed insights or install Google Chrome’s Lighthouse tool.

Preview of https://webbuildersgroup.com lighthouse results

Above is a preview of the Lighthouse results when run on our homepage. Looking pretty good! There are some small areas for improvement, but this is a score we’re definitely happy with. After you’ve run this tool on your own site, you’ll see your scores and a number of recommendations depending on how your site is performing. I’m not going to cover those in this blog post, but if you’re looking for help understanding the recommendations, or for a web developer who can implement them, please give us a call or use the form on our contact us page.
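If you’d like to track your score over time instead of checking it by hand, PageSpeed Insights also offers a web API. The sketch below assumes version 5 of that API (the runPagespeed endpoint on www.googleapis.com) and assumes the performance score comes back at lighthouseResult.categories.performance.score as a number between 0 and 1; check Google’s API documentation before relying on it. Google recommends an API key (the key parameter) for automated or frequent use, which this sketch omits. The helper names are hypothetical.

```python
import json
import urllib.parse
import urllib.request

# Assumed endpoint for version 5 of the PageSpeed Insights API.
PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"


def build_psi_url(page_url, strategy="mobile"):
    """Build the request URL for one page; strategy is "mobile" or "desktop"."""
    query = urllib.parse.urlencode({"url": page_url, "strategy": strategy})
    return f"{PSI_ENDPOINT}?{query}"


def performance_score(psi_response):
    """Turn the assumed 0-1 performance score into the 0-100 number Lighthouse displays.

    Returns None if the response carries no score (for example, a failed audit).
    """
    score = (
        psi_response.get("lighthouseResult", {})
        .get("categories", {})
        .get("performance", {})
        .get("score")
    )
    return None if score is None else round(score * 100)


def fetch_performance_score(page_url, strategy="mobile", timeout=120):
    """Run PageSpeed Insights for one page. This makes a real network request."""
    with urllib.request.urlopen(build_psi_url(page_url, strategy), timeout=timeout) as response:
        return performance_score(json.load(response))
```

Calling fetch_performance_score with your own homepage URL, for example from a scheduled job, gives you a number you can log after each change. Scores vary a little from run to run, so compare trends rather than single results.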

Conclusion

If you’ve nailed down your marketing and on-page SEO efforts but haven’t examined your technical SEO, now is the time to do it. We hope this post proves helpful. Remember that after making changes to your website, you should measure your results on a regular basis. Checking your ranking on search engine results pages (SERPs) and tracking your impressions and click-through rate (CTR) in Google Search Console are great places to start.